A brain implant and artificial intelligence have enabled a paralyzed woman to communicate again, 18 years after she suffered a stroke in 2005. The case demonstrates one of the most beautiful applications of AI in healthcare, and the technology has the potential to change the lives of millions.

AI will undoubtedly transform many lives. Researchers from UC San Francisco and UC Berkeley have demonstrated one of the most positive ways imaginable: they enabled a paralyzed woman to speak again, for the first time in almost two decades.

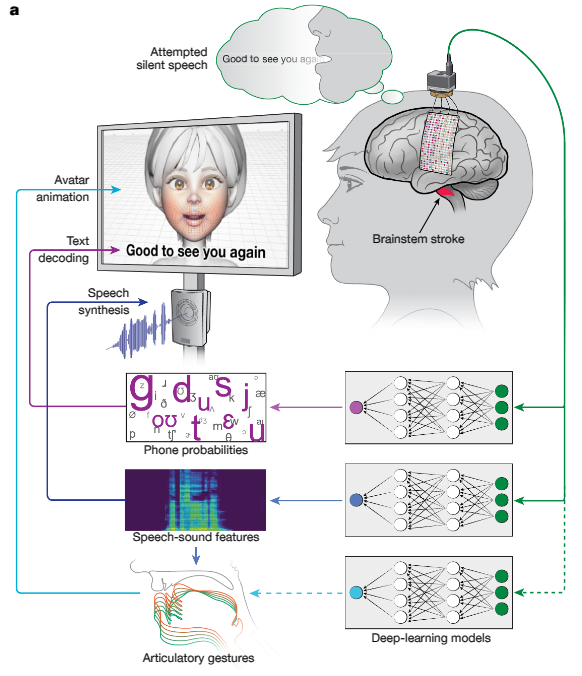

Thanks to a brain implant and deep learning algorithms, her brain signals are captured and translated into words, speech sounds, and facial expressions. A 2021 study first showed that brain signals can be decoded into text; the new system adds a personalized voice and facial expressions. This brings artificial communication much closer to natural conversation and underscores the role AI is likely to play in healthcare.

Living with paralysis

Before her stroke at the age of only 30, Ann was a high school math teacher in Canada. For the past 18 years, she has been unable to move most of the muscles in her body. The injury left her with locked-in syndrome (LIS), which means that all five senses function normally even though the connection between the brain and the body is impaired. Patients with LIS are locked inside their bodies while remaining fully aware of everything around them.

“Overnight, everything was taken from me.”

When she suffered the brainstem stroke, Ann had a husband, an 8-year-old stepson, and a 1-year-old daughter, who never had the chance to hear her mother’s real voice. Today, Ann communicates by slowly typing on a screen with small movements of her head. Over years of therapy, she regained enough control of some facial muscles to laugh or cry. However, since she still could not use her tongue, speaking remained impossible.

Interestingly, the brain may still be able to produce the signals for speech even after a severe stroke that leads to LIS. The problem is that these signals no longer reach the muscles they are meant to activate. The intact signals can, however, be recorded and used to reproduce words, sounds, and even facial expressions.

How AI reconstructs speech from brain signals

Recreating speech from brain activity takes two steps. First, a paper-thin implant containing 253 electrodes is placed on the surface of the brain. The implant covers the area responsible for speech and records its electrical signals. In the second step, deep learning algorithms translate these signals into words.

Deep Learning recognizes words from the brain

The main goal of the algorithm is to map an input signal to the correct word. Rather than whole words, the scientists used phonemes, the sound units that words are built from. 39 phonemes are sufficient to form any word in English. Thus, the algorithm only needs to learn these 39 puzzle pieces to construct every possible sentence. This makes the process more accurate and faster, which is essential for natural conversation.
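To make the "puzzle pieces" idea concrete, here is a minimal Python sketch of how a stream of decoded phonemes could be assembled into words. It is only an illustration, not the researchers' decoder: the ARPAbet-style phoneme labels, the four-word `LEXICON`, and the greedy longest-match function `phonemes_to_words` are all assumptions made for this example.

```python
# Hypothetical mini-lexicon: phoneme sequences (ARPAbet-style labels) -> words.
# A real system would use a full pronunciation dictionary and a language model.
LEXICON = {
    ("HH", "AH", "L", "OW"): "hello",
    ("HH", "AW"): "how",
    ("AA", "R"): "are",
    ("Y", "UW"): "you",
}

def phonemes_to_words(phonemes):
    """Greedily match the longest known phoneme run at each position."""
    words = []
    max_len = max(len(key) for key in LEXICON)
    i = 0
    while i < len(phonemes):
        # Try the longest candidate first, then shorter ones.
        for n in range(min(max_len, len(phonemes) - i), 0, -1):
            chunk = tuple(phonemes[i:i + n])
            if chunk in LEXICON:
                words.append(LEXICON[chunk])
                i += n
                break
        else:
            # No known word starts here: mark it and skip one phoneme.
            words.append("<unk>")
            i += 1
    return words

decoded = ["HH", "AW", "AA", "R", "Y", "UW"]
print(" ".join(phonemes_to_words(decoded)))  # prints "how are you"
```

The classifier only ever has to choose among a few dozen phoneme labels, while the lexicon can grow to any vocabulary size without changing the classifier.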

Ann helped train the algorithm to recognize those pieces. That meant attempting to say the same phrases over and over again while the model’s predictions were compared with the words she intended. Learning from labeled examples in this way is called supervised learning. Once the model correctly classifies the brain signals, the goal is achieved. Simply thinking about a word is not enough to get there. Instead, Ann has to actually attempt to speak to produce the required signals.
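The supervised-learning loop described here can be sketched in a few lines of Python. This is a toy under loud assumptions: the "brain signals" are synthetic four-number vectors, only three made-up phoneme classes are used, and a simple nearest-centroid classifier stands in for the deep learning model used in the study. The names `PROTOTYPES`, `simulate_attempt`, `train`, and `predict` are hypothetical.

```python
import random

random.seed(0)  # fixed seed so the synthetic data is reproducible

# Hypothetical "signal prototypes" for three of the 39 phoneme classes.
# Real recordings have far more channels; these numbers are made up.
PROTOTYPES = {
    "AA": [1.0, 0.0, 0.0, 0.0],
    "IY": [0.0, 1.0, 0.0, 0.0],
    "UW": [0.0, 0.0, 1.0, 0.0],
}

def simulate_attempt(label, noise=0.2):
    """Return a noisy feature vector for one attempted phoneme."""
    return [x + random.gauss(0.0, noise) for x in PROTOTYPES[label]]

def train(examples):
    """Average the labeled examples per class to get one centroid each."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for j, value in enumerate(features):
            acc[j] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def predict(centroids, features):
    """Pick the class whose centroid is closest to the feature vector."""
    def squared_distance(label):
        return sum((c - f) ** 2 for c, f in zip(centroids[label], features))
    return min(centroids, key=squared_distance)

# Labeled repetitions: the supervision is that we know what was attempted.
training_set = [(simulate_attempt(p), p) for p in PROTOTYPES for _ in range(50)]
test_set = [(simulate_attempt(p), p) for p in PROTOTYPES for _ in range(20)]

centroids = train(training_set)
correct = sum(predict(centroids, x) == y for x, y in test_set)
accuracy = correct / len(test_set)
print(f"accuracy on held-out attempts: {accuracy:.2f}")
```

The key point is the pairing of each recorded attempt with a known label. Repeating phrases many times is what provides enough labeled pairs for the model to separate the classes.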

Algorithms restored her original voice

Just like words, the sound of the voice can be recreated from brain signals. This is possible because neural activity differs depending on the intended emphasis. Hence, deep learning can distinguish between signals for screaming, whispering, and talking normally.

Ann’s voice was then reconstructed from a video recording of her wedding speech in 2005. After listening to her own synthesized voice for the first time in almost two decades, she said that her brain feels funny and that it is like hearing an old friend.

Showing emotions through AI after 18 years

In addition to words and sounds, the brain sends signals to the muscles responsible for facial expressions. Much of our communication is not purely informational but is conveyed through facial expressions and gestures. Facial movements are therefore essential for natural conversation.

A digital avatar developed by Speech Graphics simulates such facial expressions based on signals from the brain. Overall, this system enables Ann not only to communicate words but also to adjust pitch and show emotions while using her original voice.

Millions of paralyzed patients will benefit from AI in healthcare

In the US alone, more than 5.4 million people live with paralysis. They could regain much of their quality of life as AI in healthcare progresses. The researchers from San Francisco and Berkeley hope to obtain FDA approval for future systems that produce speech from brain signals.

However, because brain signals differ from person to person, a model’s learned parameters may not generalize well across patients. Models will therefore likely need to be retrained for each person. Nonetheless, the technology is still expected to give many people their autonomy back and enable them to participate in social interactions more easily.

The system already decodes speech at nearly 80 words per minute, compared with about 14 words per minute for Ann’s current head-controlled device. The next step is a wireless version: currently, Ann is connected to the computers via a cable attached to her head. In the future, she wants to show other patients that their lives are not over and that their disabilities don’t stop them from being independent and happy.
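To get a feel for the two rates mentioned above, 14 and 80 words per minute, a quick back-of-the-envelope calculation helps. The 20-word sentence length is an arbitrary assumption chosen for illustration, and `seconds_to_say` is a hypothetical helper.

```python
def seconds_to_say(word_count, words_per_minute):
    """Time in seconds needed to convey word_count words at a given rate."""
    return word_count / words_per_minute * 60

sentence_length = 20  # hypothetical sentence length in words
for rate in (14, 80):
    print(f"{rate} wpm: {seconds_to_say(sentence_length, rate):.0f} s")
```

At 14 words per minute, such a sentence takes roughly 86 seconds. At 80 words per minute it takes 15 seconds, which is much closer to the pace of a natural conversation.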

“This study has allowed me to really live while I’m still alive.”

Despite her limitations, Ann managed to become happier and bring happiness to others.

Source: Boris Dayma, Xintao Wang, Liangbin Xie et al., Wikimedia